ARC Testing & Compliance

Introduction

The ARC program is a testing process that evaluates RFID tags on the market against the application environments in which they will be used. Over time, the program has established a correlation between application environments and the tag performance levels to test against. These performance levels are defined per use case or application to create a Spec, which is then compared to each inlay's unique profile, or benchmark, in the ARC database. By creating these benchmarks, Auburn University's RFID lab ensures that tag manufacturers produce tags with a defined level of quality and performance and, in turn, that end users purchase tags that meet that standard. The ARC process is intended for end users who currently use RFID or want to implement it.

The ARC program and its resulting performance standards are so impactful because ARC is a vendor-neutral system for testing RFID tags against end users' applications. Previously, each manufacturer designed tags to EPCglobal and industry standards and tested them internally, without a reliable reference to end users' requirements. If tags from different manufacturers were compared, the testing was done either by the end user or by a manufacturer or company that was not vendor-neutral. ARC's testing and benchmarking methodology is one-of-a-kind in the RFID industry.

There are three main steps in the ARC Process:

Step 1: Pre-Pilot

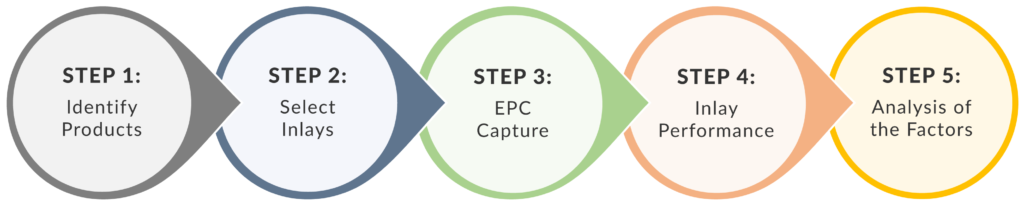

The Pre-Pilot Phase of the ARC program consists of gathering information from the end user about the deployment and completing all the steps leading up to testing or benchmarking the tags or inlays. The Pre-Pilot methodology of the ARC program is a five-step process.

Identifying the Products is the first step in the Pre-Pilot Phase and centers on the end user and the products to be tagged. Because of time constraints, not every product type can be tagged, so a specific set of product types is identified and information about that set is collected. This information includes product type, packaging, location, display formats, materials, positions, and placements. From that information, a product layout map is created.
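As a rough illustration, the collected product information could be stored as simple records and grouped by location to produce a basic layout map. This is a minimal sketch under assumptions: the `ProductInfo` fields, the `build_layout_map` helper, and the sample products are all hypothetical, and the ARC lab's actual data format is not described here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductInfo:
    """One product type in the pilot set (hypothetical field names)."""
    product_type: str
    packaging: str
    location: str        # e.g. store zone or fixture where the product sits
    display_format: str
    material: str
    tag_placement: str   # where on the product or package the tag goes


def build_layout_map(products):
    """Group product types by location to form a simple layout map."""
    layout = defaultdict(list)
    for p in products:
        layout[p.location].append(p.product_type)
    # Sort locations and product types so the map is stable and readable
    return {loc: sorted(types) for loc, types in sorted(layout.items())}


products = [
    ProductInfo("denim jeans", "folded", "zone A table", "stacked", "cotton", "hang tag"),
    ProductInfo("jacket", "hanger", "zone B rack", "hanging", "polyester", "care label"),
    ProductInfo("belt", "hook", "zone A table", "pegged", "leather", "hang tag"),
]
print(build_layout_map(products))
# {'zone A table': ['belt', 'denim jeans'], 'zone B rack': ['jacket']}
```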

Next, a set of benchmarked inlays spanning three levels of expected performance is chosen to tag the items in the deployment:

- 3 Tags expected to perform higher than average

- 3 Tags expected to perform average

- 3 Tags expected to perform lower than average

These inlays are expected to perform at these levels based on previous testing in similar environments and on each inlay's benchmarking results. One inlay is also chosen as the control. Using the same control inlay helps detect inconsistencies during testing. The control inlay is always used in similar environments and typically has lower-than-average performance in the use case.
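The selection rule above (three inlays in each expected-performance tier, plus one control) can be checked mechanically before tagging begins. This is a hypothetical sketch: `Inlay`, `validate_test_set`, and the tier labels are illustrative names, and treating the control as a tenth inlay separate from the nine test inlays is an assumption based on the wording "one inlay is also chosen."

```python
from collections import Counter
from dataclasses import dataclass

# Three inlays per expected-performance tier, as described above
EXPECTED_TIERS = {"higher": 3, "average": 3, "lower": 3}


@dataclass(frozen=True)
class Inlay:
    name: str
    expected_tier: str  # "higher", "average", or "lower" than average


def validate_test_set(inlays, control):
    """Check the 3/3/3 tier split plus a separate control (assumed rule).

    Returns the names of all inlays in the test, with the control last.
    """
    counts = dict(Counter(i.expected_tier for i in inlays))
    if counts != EXPECTED_TIERS:
        raise ValueError(f"expected 3 inlays per tier, got {counts}")
    if control in inlays:
        raise ValueError("the control inlay should not also be a test inlay")
    names = [i.name for i in inlays] + [control.name]
    if len(set(names)) != len(names):
        raise ValueError("inlay names must be unique")
    return names


test_set = [Inlay(f"{tier}-{n}", tier) for tier in EXPECTED_TIERS for n in range(1, 4)]
control = Inlay("control", "lower")
print(len(validate_test_set(test_set, control)))  # 10
```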

The Data Capture stage involves reading the RFID tags, which are attached to the products, in the environment and recording the data. The way the tags are tested must match the use case as closely as possible. If hardware has already been selected for the use case, the Data Capture stage uses the same setup, including reader hardware, antenna hardware, reader software, read distance, orientation, memory banks read, and tag sessions.
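One way to keep the test setup aligned with the deployment is to describe both as configurations and list where they differ. In this sketch, `CaptureConfig`, `mismatches`, and the sample values are hypothetical; the session names (S0 to S3) and memory bank names (Reserved, EPC, TID, User) come from the EPC UHF Gen2 air interface protocol.

```python
from dataclasses import dataclass, field

GEN2_SESSIONS = {"S0", "S1", "S2", "S3"}
GEN2_MEMORY_BANKS = {"Reserved", "EPC", "TID", "User"}


@dataclass
class CaptureConfig:
    """Hypothetical description of a read setup."""
    reader_model: str
    antenna_model: str
    reader_software: str
    distance_m: float
    orientation: str
    memory_banks: list = field(default_factory=lambda: ["EPC"])
    session: str = "S0"

    def validate(self):
        """Basic sanity checks against Gen2 session and memory bank names."""
        if self.session not in GEN2_SESSIONS:
            raise ValueError(f"unknown Gen2 session: {self.session}")
        bad = set(self.memory_banks) - GEN2_MEMORY_BANKS
        if bad:
            raise ValueError(f"unknown memory banks: {sorted(bad)}")
        if self.distance_m <= 0:
            raise ValueError("distance must be positive")
        return self


def mismatches(test_cfg, deployment_cfg):
    """List the settings where the test setup differs from the deployment."""
    return [name for name in vars(deployment_cfg)
            if getattr(test_cfg, name) != getattr(deployment_cfg, name)]


deployment = CaptureConfig("reader-X", "antenna-Y", "app v1", 3.0, "vertical", ["EPC", "TID"], "S1").validate()
test_cfg = CaptureConfig("reader-X", "antenna-Y", "app v1", 2.0, "vertical", ["EPC", "TID"], "S1").validate()
print(mismatches(test_cfg, deployment))  # ['distance_m']
```

An empty mismatch list is a quick signal that the Data Capture setup mirrors the deployment as closely as the recorded settings allow.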

After ARC testing is completed, the valid data is processed, performance levels are calculated, and inlays that do not achieve a 100% return rate are identified. Once all the information is returned, an analysis is done to identify any factors that affected inlay performance. If a specific testing scenario shows a high failure rate, the sample size is increased and the scenario is retested to better understand the problem.
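The calculation described above can be sketched as per-inlay return rates plus a per-scenario failure check. This assumes each read attempt is logged as a hypothetical `(inlay, scenario, tag_id, was_read)` tuple; the 20% retest threshold is an illustrative assumption, not an ARC value.

```python
from collections import defaultdict


def return_rates(reads):
    """Fraction of tags read per inlay, from (inlay, scenario, tag_id, was_read) records."""
    seen = defaultdict(int)
    read = defaultdict(int)
    for inlay, _scenario, _tag, was_read in reads:
        seen[inlay] += 1
        read[inlay] += int(was_read)
    return {inlay: read[inlay] / seen[inlay] for inlay in seen}


def below_full_rate(rates):
    """Inlays that did not achieve a 100% return rate."""
    return sorted(i for i, r in rates.items() if r < 1.0)


def scenarios_to_retest(reads, max_failure_rate=0.2):
    """Scenarios whose failure rate exceeds an assumed threshold."""
    total = defaultdict(int)
    failed = defaultdict(int)
    for _inlay, scenario, _tag, was_read in reads:
        total[scenario] += 1
        failed[scenario] += int(not was_read)
    return sorted(s for s in total if failed[s] / total[s] > max_failure_rate)


reads = [
    ("inlay-A", "shelf", "t1", True),
    ("inlay-A", "shelf", "t2", True),
    ("inlay-B", "shelf", "t3", True),
    ("inlay-B", "metal rack", "t4", False),
    ("inlay-B", "metal rack", "t5", True),
]
print(below_full_rate(return_rates(reads)))  # ['inlay-B']
print(scenarios_to_retest(reads))            # ['metal rack']
```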

Step 2: Benchmarking

Benchmarking occurs when new inlays are released on the market, on average about 35 new inlays per year. The ARC program currently works with over 20 inlay suppliers who submit their inlays for ARC testing. While Auburn University's RFID lab publishes all of its testing procedures and methodology for inlay suppliers to replicate, a tag is only ARC certified once it has undergone the official benchmarking process at the ARC lab. End users rely on the ARC program to recommend inlays because the lab follows a strict benchmarking procedure and is completely vendor-neutral. As a result, several manufacturers participate in the ARC program to earn a certification that confirms the quality of their products and helps them stand out in their markets.

When inlay manufacturers send their inlays in to be benchmarked, or ARC certified, the inlays are put through intensive testing. To start the process, a roll of 4,000 inlays is sent to the Auburn University RFID lab and each inlay is uniquely encoded.
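The lab's actual encoding scheme is not described here, but "uniquely encoded" simply means that no two inlays on the roll share an EPC. A minimal sketch, assuming a hypothetical 96-bit layout with a 32-bit roll identifier in the upper bits and a sequential index in the lower 64 bits:

```python
def encode_roll(roll_id, count=4000):
    """Assign each inlay on a roll a unique 96-bit EPC as 24 hex characters.

    Hypothetical layout: upper 32 bits = roll identifier,
    lower 64 bits = sequential inlay index on the roll.
    """
    if not 0 <= roll_id < 2**32:
        raise ValueError("roll_id must fit in 32 bits")
    # Zero-pad to 24 hex digits (96 bits); distinct indices give distinct EPCs
    return [f"{(roll_id << 64) | i:024X}" for i in range(count)]


epcs = encode_roll(0x0A2C0001)
print(len(set(epcs)))  # 4000
```

Because the index occupies its own bit field, uniqueness follows from construction rather than from a lookup against previously written EPCs.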

Two main types of ARC tests are performed on the inlays: Standard tests and Custom tests.

- Standard tests create a base/general read and write profile for the inlay.

- Custom tests are completed based on the intended use case for the inlay. These custom tests are done in order to create a Use Case Profile for the inlay.

Standard and Custom tests are completed in two variations: a Single Inlay test and a Multi-Inlay Proximity test. The Single Inlay test determines the effect of the medium on the inlay, while the Multi-Inlay Proximity test determines the effect of nearby inlays in addition to the medium. All ARC tests gather four measurements:

- Read Sensitivity

- Associated Backscatter Signal Strength (at read sensitivity)

- Write Sensitivity

- Associated Backscatter Signal Strength (at write sensitivity)

While recording those four measurements, the inlays are tested across different variables and on different test materials (mediums).
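The test types, variations, and measurements listed above combine into a test matrix. The sketch below enumerates that matrix with `itertools.product`; the medium names are placeholders, since the actual ARC mediums and variables are not listed in this text, and `build_test_plan` is a hypothetical helper.

```python
from itertools import product

TEST_TYPES = ["Standard", "Custom"]
VARIATIONS = ["Single Inlay", "Multi-Inlay Proximity"]
MEASUREMENTS = [
    "read sensitivity",
    "backscatter at read sensitivity",
    "write sensitivity",
    "backscatter at write sensitivity",
]


def build_test_plan(mediums):
    """Enumerate every (test type, variation, medium, measurement) combination."""
    return list(product(TEST_TYPES, VARIATIONS, mediums, MEASUREMENTS))


plan = build_test_plan(["medium 1", "medium 2", "medium 3"])  # placeholder mediums
print(len(plan))  # 48, i.e. 2 * 2 * 3 * 4
```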

For a look at the variables, mediums, and general benchmarking flow, check out the testing process chart below.

After the testing has been done, an Inlay Base Profile and Inlay Use Case Profile are created and sent to the appropriate outlets.
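As the introduction notes, a use-case Spec is compared against an inlay's profile. A minimal sketch of that comparison, assuming both are hypothetical mappings from medium name to read sensitivity in dBm, where a lower (more negative) value means a more sensitive inlay. The numbers are illustrative only, not ARC data.

```python
def meets_spec(profile, spec):
    """Return (passes, failing_mediums) for an inlay profile against a Spec.

    Both arguments map a medium name to read sensitivity in dBm (assumed format).
    Lower is better, so a medium passes when the profile value is at or below
    the Spec limit. A medium missing from the profile counts as a failure.
    """
    failures = []
    for medium, limit_dbm in spec.items():
        value = profile.get(medium)
        if value is None or value > limit_dbm:
            failures.append(medium)
    return not failures, sorted(failures)


spec = {"cardboard": -16.0, "plastic": -14.0}  # illustrative limits
inlay_profile = {"cardboard": -18.5, "plastic": -13.0, "glass": -12.0}
print(meets_spec(inlay_profile, spec))  # (False, ['plastic'])
```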

Step 3: Audit

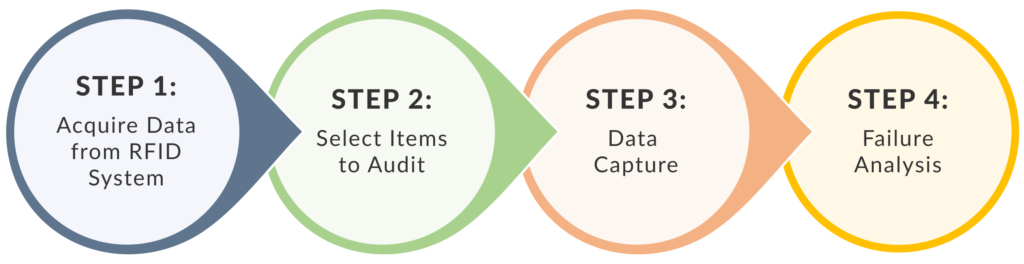

Audits can occur at any time, depending on the performance of the RFID hardware and tags in the deployment. If an end user's RFID deployment is underperforming and not achieving expected read rates, the ARC lab can audit the tags to identify any and all problems. The audit is a four-step process, laid out below.

The first step in the audit process is to retrieve a list of EPC or SGTIN numbers as soon as the audit begins (verify your SGTIN-96 with Vulcan RFID Read & Write Software). It is critical that no tags are added after the list has been gathered, so that the data is not skewed. When the list is complete, the ARC team uses its own hardware to perform a cycle count of all the tags in the deployment. Because the ARC team conducts the audit with independent hardware, it can help determine whether the problem is related to employees or to the deployed hardware.
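Comparing the EPC list captured at the start of the audit with the ARC cycle count comes down to set differences. In this sketch, `reconcile` and the short EPC placeholders are hypothetical; "unexpected" reads are tags that were counted but were not on the original list, which is one reason no tags should be added after the list is gathered.

```python
def reconcile(expected_epcs, cycle_count_epcs):
    """Compare the audit-start EPC list with the independent cycle count."""
    expected = set(expected_epcs)
    counted = set(cycle_count_epcs)
    return {
        "missing": sorted(expected - counted),     # on the list but not read
        "unexpected": sorted(counted - expected),  # read but not on the list
        "accuracy": len(expected & counted) / len(expected) if expected else 0.0,
    }


# Placeholder EPCs for illustration
result = reconcile(["E001", "E002", "E003", "E004"], ["E001", "E002", "E004", "E009"])
print(result)  # {'missing': ['E003'], 'unexpected': ['E009'], 'accuracy': 0.75}
```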

Step two is to decide what will be included in the audit and subject to the ARC test. Auditing every tag or tagged item is not time-efficient, so a subset is chosen across different locations and zones, where available. While the subset is being created, a layout of the space, including furniture and fixtures, is also drawn up for performance marking.
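The source does not say how the subset is drawn. One reasonable assumption is a stratified sample, taking up to a fixed number of items from each zone so every area is represented. `sample_by_zone` and the `(item_id, zone)` input format are hypothetical; the fixed seed makes the subset reproducible.

```python
import random
from collections import defaultdict


def sample_by_zone(items, per_zone, seed=0):
    """Pick up to `per_zone` item IDs from each zone (assumed stratified sampling).

    `items` is an iterable of (item_id, zone) pairs.
    """
    by_zone = defaultdict(list)
    for item_id, zone in items:
        by_zone[zone].append(item_id)
    rng = random.Random(seed)  # fixed seed so the subset can be reproduced
    return {
        zone: sorted(rng.sample(ids, min(per_zone, len(ids))))
        for zone, ids in sorted(by_zone.items())
    }


items = [(f"item-{i}", "front" if i < 10 else "back") for i in range(14)]
subset = sample_by_zone(items, per_zone=5)
print({zone: len(ids) for zone, ids in subset.items()})  # {'back': 4, 'front': 5}
```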

Steps three and four, data capture and failure analysis, are straightforward. Data capture collects not only the EPC but also the UPC, TID, and inlay type. Failure analysis walks through all the audited items to find the root cause(s) of the problems. Failures the ARC team looks for include damaged tags, double tags, wrong encoding, tags in the wrong location, and duplicate EPCs.
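Some of these failure types can be flagged automatically from the captured data, while others, such as damaged or double tags, need physical inspection. The sketch below assumes a hypothetical record format (a dict per read with `epc`, `upc`, `tid`, `inlay_type`, `zone`, and `expected_zone`) and assumes the deployment encodes SGTIN-96, whose EPC Tag Data Standard header is `0x30`. A duplicate EPC is detected as the same EPC appearing on more than one TID, since each chip's TID differs.

```python
import re
from collections import defaultdict

SGTIN96_HEADER = "30"  # EPC Tag Data Standard header byte for SGTIN-96
HEX96 = re.compile(r"[0-9A-F]{24}")  # 96 bits as 24 hex characters


def find_failures(records):
    """Flag wrong encodings, misplaced items, and duplicate EPCs.

    Each record is a dict with keys: epc, upc, tid, inlay_type, zone,
    expected_zone (hypothetical capture format).
    """
    failures = defaultdict(list)
    tids_by_epc = defaultdict(set)
    for r in records:
        epc = r["epc"].upper()
        tids_by_epc[epc].add(r["tid"])
        if not HEX96.fullmatch(epc) or not epc.startswith(SGTIN96_HEADER):
            failures["wrong_encoding"].append(r["tid"])
        if r["zone"] != r["expected_zone"]:
            failures["wrong_location"].append(r["tid"])
    for epc, tids in tids_by_epc.items():
        if len(tids) > 1:  # same EPC on physically different chips
            failures["duplicate_epc"].append(epc)
    return dict(failures)


epc_a = "30" + "1".zfill(22)   # placeholder SGTIN-96-style EPC
epc_bad = "E2" + "1".zfill(22)  # placeholder with a non-SGTIN-96 header
records = [
    {"epc": epc_a, "upc": "u1", "tid": "TID-1", "inlay_type": "t1", "zone": "A", "expected_zone": "A"},
    {"epc": epc_a, "upc": "u2", "tid": "TID-2", "inlay_type": "t1", "zone": "B", "expected_zone": "A"},
    {"epc": epc_bad, "upc": "u3", "tid": "TID-3", "inlay_type": "t2", "zone": "B", "expected_zone": "B"},
]
print(find_failures(records))
```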

After the Failure Analysis has been completed, Auburn University's RFID lab creates a report documenting all identified failures, risks, and audit information. Regular audits are recommended to prevent or manage failures in a deployed system.

Conclusion

For more information about Auburn's RFID Lab or the ARC program, comment below or contact us!
